Leiden University Libraries & Elsevier Seminars on Reproducible Research: Wrap-up Seminar 1

The first seminar in the Leiden University Libraries & Elsevier seminar series on Reproducible Research focused on the overall rationale for reproducible research.

Did you miss this seminar or would you like to watch some parts (again)?

You can watch the recording of the seminar on this playlist: https://www.youtube.com/playlist?list=PL-vTxWFyVBpEllXo6thA8ZQ_OpYkThIfQ

If you have not yet registered for the remaining two seminars, you can register here. Please subscribe to the UBLeiden YouTube channel so that you do not miss the wrap-up videos for seminars 2 & 3.

WRAP-UP Seminar 1

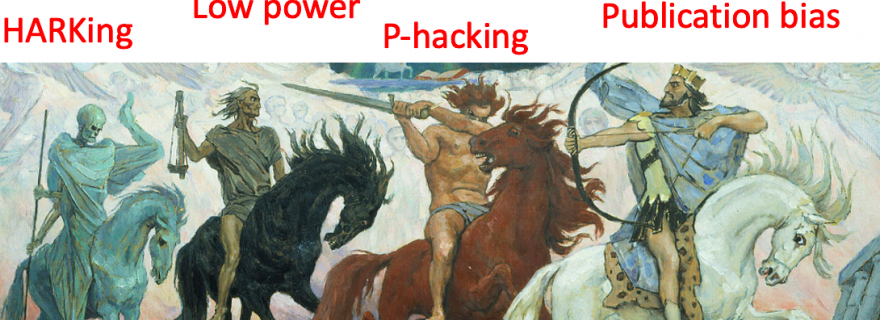

Dorothy Bishop first explained some of the central challenges. She showed that in disciplines such as biomedicine, pharmaceutics and psychology, there are indeed large numbers of studies whose findings cannot be replicated. She identified four main causes, which she referred to as the four horsemen of irreproducibility. P-hacking, first, is the practice of running many statistical tests and then reporting only those that yield statistically significant results. Publication bias, second, is the tendency of authors to write primarily about positive results and to leave null or negative results unreported. HARKing, or ‘Hypothesizing After the Results are Known’, third, arises from a failure to distinguish clearly between research that tests hypotheses and research that generates them. Low statistical power, finally, means that studies, often because of small sample sizes, may fail to detect real effects and so produce false negatives. Irreproducible findings can be very problematic for other scientists, and especially for early career researchers, who may waste time and resources trying to build on results that are not solid. Irreproducibility also causes problems for society. Pharmaceutical companies developing new drugs and treatments, for instance, need to be absolutely sure that the findings they work with are reliable and robust in order to avoid potential health risks.

Brian Nosek focused more specifically on some of the ways in which we can address the challenges outlined in the first lecture. We clearly need to encourage specific forms of behaviour, but, in addition, the current system of scholarly communication, and in particular the way in which researchers are rewarded and incentivized, needs to change as well. At the moment, researchers are mainly rewarded for getting their findings published, not for getting their findings right. To foster reproducibility, scientists should be encouraged to share their data and all other relevant materials as openly and as broadly as possible. Nosek also explained that the problems surrounding publication bias can be alleviated by encouraging researchers to pre-register their studies. This essentially means that researchers submit their research questions, research design and methods to a registry before carrying out the actual research. This pre-registration can then be reviewed by peers, who can assess whether the question is relevant and whether the proposed methodology is sound. Ideally, a journal also commits to publishing the results of the pre-registered study, regardless of the outcome. Nosek further argued that, to encourage researchers to share their data, document their methods and pre-register their planned studies, we need to make all the necessary actions possible, easy, normative, rewarding and, ultimately, required. To make data sharing normative, for instance, journals can award badges to papers that include a link to the raw data. Nosek showed a graph indicating that, in the journal Psychological Science, the number of open data sets increased dramatically after the journal started awarding badges.
The shift towards reproducible science demands a transformation of current norms, conventions and incentives, and such a cultural change ultimately requires a concerted effort by all stakeholders in scholarly communication, including funders, universities and publishers.

Daniël Lakens moderated the panel discussion. The discussion first focused on the value of incremental research, that is, research that builds on the results of earlier studies. A single research project rarely delivers the final word on a topic, so researchers should feel encouraged to delve deeply into a problem and to investigate the phenomena they are interested in from many different angles. Such incremental research is often hampered, however, because funders tend to expect innovative, ‘ground-breaking’ research and typically fund projects only for a limited period. The panellists also stressed the value of collaboration, not only among publishers, funders and universities but also among researchers in different disciplines. If we genuinely want a transition towards open science, we should stop pointing fingers at others. Instead, we should accept that we share responsibility and that we can all contribute to the change that is needed. The transition towards open science seems to be proceeding rapidly in some fields, while it is proving more difficult in others. At a more abstract level, however, many of the goals and challenges are very similar across disciplines. We should encourage more cross-disciplinary exchange so that the different communities can learn from each other’s experiences.